Inworld Vision Pro Module

Inworld Vision Pro Module is a project showcasing Inworld's Unity SDK with Polyspatial on the Apple Vision Pro. This guide covers the basics of installing and getting started with the Inworld Vision Pro module.

Installation

To install Inworld for Vision Pro, perform the steps below:

- Ensure you are working in Unity 2022.3.20f1 with the Vision Pro module installed and enabled.

- Create a new 3D Unity project using the default template (built-in render pipeline).

- Install the following Unity packages:

- Download and install the latest version of this project using the .unitypackage available from the Releases tab.

- Build the project and install it on your Vision Pro.

Note that this sample project includes a modified version of the Inworld Unity SDK version 3.1.

Apple Vision Pro Setup

To set up the Vision Pro module, follow the steps below, in order.

Preparation

Before beginning setup of the Vision Pro module, perform the following two checks:

Eye Gazing Not Accurate. If eye gazing is not accurate, press the physical Photo button on the top left of your device four (4) times, then follow the instructions to recalibrate eye tracking.

Unable to Adjust Volume. If you cannot adjust the volume, open Control Center or slightly rotate the Home button, then gaze at the volume control. If you are unable to select the volume, check the Eye Gazing setting.

Requirements

The following are requirements for using the Vision Pro module.

- Unity Pro License. For each serial number, only one license is supported per machine. Because building the Inworld Unity SDK does not otherwise require a Pro license, you can return your current Pro license from its existing machine and use it to register Unity on your Apple silicon Mac.

- Apple Silicon Mac. Although Unity itself does not require Apple silicon, a Unity visionOS build outputs an Xcode project, which requires Xcode with visionOS support to build and run. visionOS support can only be installed on an Apple silicon Mac (M1, M2, etc.).

- No Apple Developer Account Required. You do not need an Apple developer account to develop on the Vision Pro.

Debug in Unity

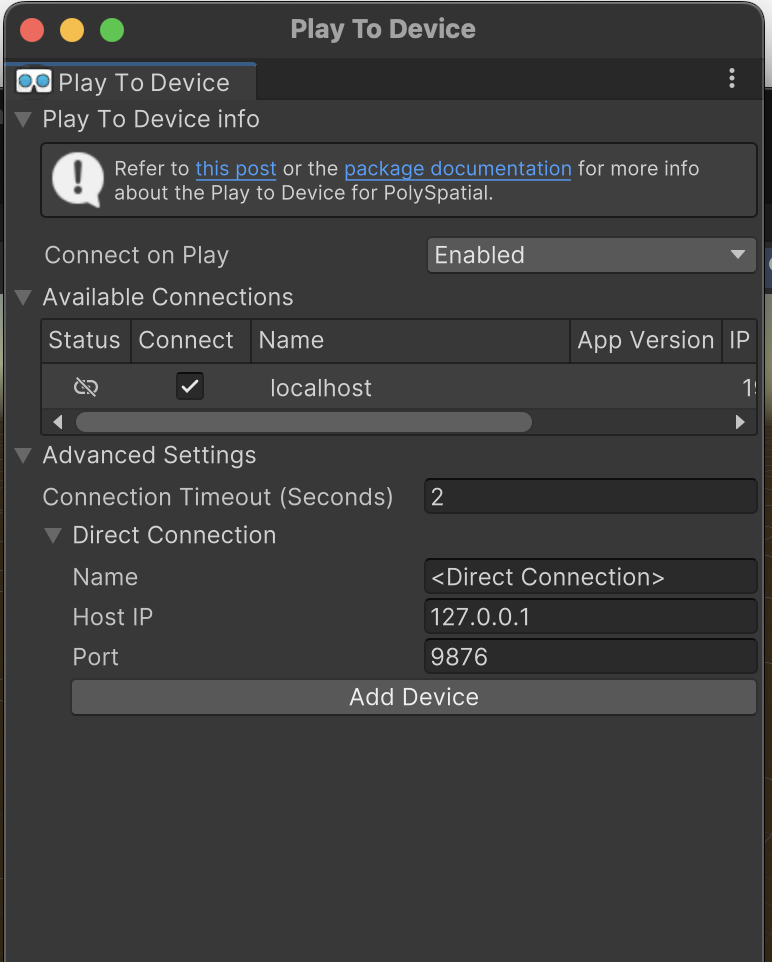

Download Play to Device. For more information, visit the following link: link

Play to Device comes in two versions: one for the Simulator and one for a real device.

To test directly on a real device, install Play to Device through TestFlight using the invitation link.

After Play to Device is installed, open the app on the Vision Pro. The app shows your device's IP address and port. Enter both into the host IP field.

Finally, click OK.

Build Applications

To build the application, perform the following steps:

1. Enable Developer Mode

To enable Developer Mode on the Apple Vision Pro:

On the Mac, navigate to: Xcode -> Window -> Devices and Simulators

Then, on the Vision Pro, navigate to: Settings -> General -> Remote Devices to pair it with the Mac.

Finally, on the Vision Pro, open Privacy & Security and turn on Developer Mode.

2. Trust Applications

In Xcode:

- Log out of your developer account.

- Clean build: Command + Shift + Option + K.

- Delete cache ~/Library/Developer/Xcode/DerivedData/ModuleCache (you can use Finder).

- Log back in to your developer account.

On Vision Pro:

- Go to Settings -> General -> VPN and Device Management: Delete your previous app and its data.

- RESTART Vision Pro

3. Turn Off Screen Mirroring

Finally, turn off screen mirroring.

If screen mirroring is not turned off, the app will not be able to use the microphone, because while mirroring is active the microphone is sampled solely from the Mac.

Project Structure

The project is separated into four additively-loaded Content Scenes, plus the Base Scene responsible for initialization and holding objects that persist throughout the application's life.

The majority of the project's assets are contained within the _LC folder, categorized first by asset type (Prefabs, Models, Sprites, etc.) and then by the scene they are used in (Home, Fortune, Artefact or GameShow).

Scene Structure - Changing Inworld Scenes

It is important to note that in Inworld SDK version 3.2 and onward, a single InworldController component is permanently bound to a single Inworld scene.

This means that to change Inworld scenes, you must destroy the currently active InworldController and create a new one whose Scene ID is preconfigured in the Inspector with the scene you want to move to. In this sample project, this is achieved with additive 'content' scenes, each containing its own InworldController, that are loaded on top of the base scene.
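The swap order described above can be modeled in a few lines. This is a language-neutral Python sketch, not the project's Unity C# code. Every name here (InworldControllerStub, SceneSwapper, and the scene ID strings) is hypothetical; the real logic lives in GdcScene and HatSwitcher. The sketch captures only one invariant: at most one InworldController is alive, and the old one is torn down before the next content scene creates its own.

```python
class InworldControllerStub:
    """Hypothetical stand-in for an InworldController bound to one Inworld scene."""
    live = 0  # number of controllers currently alive

    def __init__(self, scene_id: str):
        if InworldControllerStub.live:
            raise RuntimeError("another InworldController is still alive")
        self.scene_id = scene_id
        InworldControllerStub.live += 1

    def destroy(self) -> None:
        InworldControllerStub.live -= 1


class SceneSwapper:
    """Loads additive content scenes on top of a persistent base scene."""

    def __init__(self):
        self.loaded = ["Base"]  # the base scene is never unloaded
        self.controller = None

    def switch_to(self, content_scene: str, scene_id: str) -> None:
        if self.controller is not None:
            # 1. Destroy the current controller first: it is bound to its Inworld scene.
            self.controller.destroy()
            self.controller = None
            # 2. Unload the previous content scene, keeping only the base scene.
            self.loaded = self.loaded[:1]
        # 3. Load the new content scene additively; its controller comes
        #    preconfigured with the target Inworld scene ID.
        self.loaded.append(content_scene)
        self.controller = InworldControllerStub(scene_id)


# Hypothetical scene IDs, for illustration only.
swapper = SceneSwapper()
swapper.switch_to("Home", "workspaces/demo/scenes/home")
swapper.switch_to("Fortune", "workspaces/demo/scenes/fortune")
print(swapper.loaded)  # ['Base', 'Fortune']
```

Raising an error when a second controller is created makes an ordering mistake fail loudly, which is useful when the teardown is timing-sensitive.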

The base scene contains the following GameObjects:

- Base XR components: This includes the AR Session, XR Origin, Polyspatial VolumeCamera, Gesture Recognition objects and the singleton controller for the touch interaction system.

- InworldConnectionRoutine: This manages the initial load of the application.

- HatSwitcher: This controls switching between the four content scenes based on the placement/removal of Innequin's hats.

- DebugSceneInterface: A set of editor buttons for common functions that are difficult to perform in the editor without wearing a headset.

- SceneObjects common to all content scenes: Includes colliders matched to the application volume boundaries, the application UI (excluding transcript canvases), and the grab handle used to move the application while in unbounded mode.

Upon application start, InworldConnectionRoutine performs several functions to ensure the application starts up correctly and then loads the first content scene. See the class itself for additional details.

Each of the content scenes contains the following GameObjects:

- Main Scene Object: A main scene object that inherits from the GdcScene class. This class is responsible for initializing the scene and ensuring that the InworldController and Character have been successfully initialized. These classes also contain the interface layer between the scene logic and Inworld, and in some cases utility methods that connect the various elements of the scene. See the GdcScene class for details on unloading a currently active InworldController/Character and loading a new one, as the process can be timing-sensitive.

- An InworldController and Character: Note that the InworldCharacter has a DisableOnAwake component. This component has been added to the project's Script Execution Order settings to ensure that its GameObject is disabled before the InworldCharacter has a chance to initialize (when the scene first loads, the InworldController has not yet been activated). This was done to aid scene composition. Alternatively, you can remove this component and disable the GameObject manually; the GdcScene GameObject will enable it.

- The transcript canvas: This object shows the real-time transcript of the player's and Innequin's messages. It copies its visibility from the input field, since the two are shown and hidden together.

- Scene assets and logic: These vary between scenes.
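The ordering that DisableOnAwake enforces can be modeled with a generator standing in for a Unity coroutine, where each next() call is one frame. This is a hypothetical Python sketch, not the project's GdcScene. The Controller and Character classes and the frame on which the controller becomes ready are assumptions. The character starts disabled, and the scene enables it only after the controller reports that it is ready, so the character never initializes against an inactive controller.

```python
class Controller:
    """Hypothetical stand-in for the scene's InworldController."""

    def __init__(self):
        self.ready = False

    def activate(self) -> None:
        self.ready = True


class Character:
    """Hypothetical stand-in for the InworldCharacter GameObject."""

    def __init__(self, controller: Controller):
        self.controller = controller
        self.enabled = True
        self.initialized = False

    def disable_on_awake(self) -> None:
        # Mirrors DisableOnAwake: runs before the character can initialize.
        self.enabled = False

    def enable(self) -> None:
        if not self.controller.ready:
            raise RuntimeError("character enabled before controller was ready")
        self.enabled = True
        self.initialized = True


def gdc_scene_start(controller: Controller, character: Character):
    """Coroutine-style start-up: one yield per frame until the controller is ready."""
    character.disable_on_awake()
    while not controller.ready:
        yield  # wait one frame
    character.enable()


controller = Controller()
character = Character(controller)
routine = gdc_scene_start(controller, character)
for frame in range(5):
    if frame == 2:
        controller.activate()  # assume the controller finishes connecting on frame 2
    try:
        next(routine)
    except StopIteration:
        break
print(character.enabled, character.initialized)  # True True
```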

Useful Utility Classes

Several general-purpose components are included in this sample project that can be repurposed in your own projects:

- Gesture Recognition: The classes GestureRecognitionBase, OneHandedGestureRecognizer, and LoveHeartGesture demonstrate how to use the XRHands Unity package to detect complex two-handed gestures within the same unified framework as one-handed gestures.

- InworldAsyncUtils: Contains several static methods for common Inworld interactions that take place over several frames, as well as shorthand methods that make code more concise (e.g. InworldAsyncUtils.SendText("hello") rather than InworldController.Instance.SendText("hello", InworldController.CurrentCharacter.ID)).

- InworldCharacterEvents: Provides quick access to specific types of packets coming from the Inworld server. For example, if you only want to access TextPackets, you can simply write InworldCharacterEvents.OnText += m => Debug.Log(m.text.text);

- Interaction System: A simple interaction system centered around Polyspatial interactions and magnetic snapping of objects onto target positions is included in the VisionOS directory. To use it, the scene requires a TouchInteractionSystem component. From there, objects can be made interactable by adding a TouchReceiver component (or a component that inherits from it, such as Grabbable). Add a MagneticSnapTarget component to the scene to allow Grabbable objects to be snapped. See RemovableHat for an example of how to override the Grabbable class.
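The idea behind InworldCharacterEvents can be sketched as a dispatcher that routes each incoming packet only to subscribers of its type. This is an illustrative Python model. The packet classes, their fields, and the dispatch method are assumptions rather than the SDK's actual packet model. In C#, OnText += ... subscribes to a multicast delegate, which is approximated here with a list of callbacks.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TextPacket:
    text: str


@dataclass
class EmotionPacket:
    emotion: str


class CharacterEvents:
    """Hypothetical dispatcher: subscribers only see the packet type they asked for."""

    def __init__(self):
        self.on_text: List[Callable[[TextPacket], None]] = []
        self.on_emotion: List[Callable[[EmotionPacket], None]] = []

    def dispatch(self, packet) -> None:
        if isinstance(packet, TextPacket):
            handlers = self.on_text
        elif isinstance(packet, EmotionPacket):
            handlers = self.on_emotion
        else:
            return  # packet types with no filtered event are ignored
        for handler in handlers:
            handler(packet)


events = CharacterEvents()
received = []
events.on_text.append(lambda p: received.append(p.text))  # like OnText += ...
for packet in [TextPacket("Hello!"), EmotionPacket("JOY"), TextPacket("Welcome.")]:
    events.dispatch(packet)
print(received)  # ['Hello!', 'Welcome.']
```

With this design, the type check happens once in the dispatcher instead of in every subscriber.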

Notes

The following are useful notes to keep in mind when working with the Inworld Vision Pro Module.

Microphone Capture

This project supports Polyspatial microphone input to Inworld.

To achieve this reliably, some modifications were made to AudioCapture.cs, which is part of the Inworld SDK. The script works acceptably out of the box, but whenever the application loses focus (e.g. if the user minimizes it to the dock, or interacts with any other bounded-volume application), the unaltered AudioCapture class can never regain microphone access.

The following changes were made:

- An OnAmplitude callback method was added to provide user feedback on volume changes (executed within the CalculateAmplitude method).

- An IsRecording property was added, which avoids having to add compilation flags to the various parts of the class that check whether recording is active. This property is used to determine whether recording needs to be reinitialized when the application loses focus.
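The two additions can be sketched as follows. This Python model makes several assumptions. It computes amplitude as the RMS of a sample buffer, but the SDK's CalculateAmplitude may use a different measure. The on_amplitude and is_recording members are hypothetical stand-ins for the OnAmplitude callback and the IsRecording property.

```python
import math
from typing import Callable, Optional, Sequence


class AudioCaptureModel:
    """Hypothetical model of the modified AudioCapture class."""

    def __init__(self, on_amplitude: Optional[Callable[[float], None]] = None):
        self.on_amplitude = on_amplitude  # stand-in for the OnAmplitude callback
        self._recording = False

    @property
    def is_recording(self) -> bool:
        # A single place to ask "are we recording?" instead of per-platform checks.
        return self._recording

    def start(self) -> None:
        self._recording = True

    def stop(self) -> None:
        self._recording = False

    def calculate_amplitude(self, samples: Sequence[float]) -> float:
        """Root-mean-square amplitude of one buffer (assumed measure)."""
        if not samples:
            return 0.0
        amplitude = math.sqrt(sum(s * s for s in samples) / len(samples))
        if self.on_amplitude is not None:
            self.on_amplitude(amplitude)  # user feedback, e.g. a volume meter
        return amplitude


levels = []
capture = AudioCaptureModel(on_amplitude=levels.append)
capture.start()
capture.calculate_amplitude([0.5, -0.5, 0.5, -0.5])
print(levels, capture.is_recording)  # [0.5] True
```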

The most important class used to manage the microphone state is AudioCaptureFocusManager.cs, which automatically enables/disables the AudioCapture component based on the changing focus state of the application.
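Below is a minimal model of focus-driven toggling. It assumes the manager mirrors the application's focus state onto the capture component and restarts recording when focus returns, if it was recording before. The class names are hypothetical. In Unity, focus changes typically arrive through MonoBehaviour.OnApplicationFocus(bool), but the document does not state which signal AudioCaptureFocusManager actually uses.

```python
class CaptureComponent:
    """Hypothetical stand-in for the AudioCapture component."""

    def __init__(self):
        self.enabled = True
        self.recording = False
        self.restarts = 0

    def start_recording(self) -> None:
        self.recording = True
        self.restarts += 1

    def stop_recording(self) -> None:
        self.recording = False


class FocusManager:
    """Hypothetical model of AudioCaptureFocusManager's behavior."""

    def __init__(self, capture: CaptureComponent):
        self.capture = capture
        self.was_recording = False

    def on_application_focus(self, has_focus: bool) -> None:
        if not has_focus:
            # Remember whether we need to resume, then release the microphone.
            self.was_recording = self.capture.recording
            self.capture.stop_recording()
            self.capture.enabled = False
        else:
            self.capture.enabled = True
            if self.was_recording:
                # Reinitialize rather than assuming the old session still works.
                self.capture.start_recording()


capture = CaptureComponent()
manager = FocusManager(capture)
capture.start_recording()
manager.on_application_focus(False)  # e.g. the user opens another app
manager.on_application_focus(True)   # focus returns
print(capture.enabled, capture.recording, capture.restarts)  # True True 2
```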

Texture Preloading

In the current version of Polyspatial (VisionOS 1.1, Polyspatial 1.1.4), scripts which quickly change textures on materials (notably including changing Innequin's expression sprites) sometimes show a magenta 'missing shader' material for a single frame the first time you use a sprite.

To address this, preloading scripts quickly load all necessary sprites when the application loads.

This is done by assigning textures, one frame at a time, to a small object in the scene. For an example, see the FacePreloader object, found as a child of the InworldConnectionRoutine GameObject in the Start scene. The same technique is used to preload the category textures for the LEDs in the game show scene.
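The one-texture-per-frame approach can be sketched as a generator that plays the role of a Unity coroutine. Each loop iteration represents one frame, and each frame assigns the next texture to a small preload target so it has been shown once before it is needed. The texture names and the PreloadTarget class are hypothetical. In Unity, the assignment would go to a material texture on an object such as FacePreloader.

```python
from typing import Iterator, List


class PreloadTarget:
    """Hypothetical small scene object whose material texture gets swapped."""

    def __init__(self):
        self.current_texture = None
        self.seen: List[str] = []

    def assign(self, texture: str) -> None:
        self.current_texture = texture
        self.seen.append(texture)


def preload(target: PreloadTarget, textures: List[str]) -> Iterator[None]:
    for texture in textures:
        target.assign(texture)
        yield  # wait one frame so the texture is rendered once


target = PreloadTarget()
textures = ["face_neutral", "face_happy", "face_sad"]  # hypothetical sprite names
frames = 0
for _ in preload(target, textures):
    frames += 1
print(frames, target.seen)  # 3 ['face_neutral', 'face_happy', 'face_sad']
```

Spreading the assignments over frames keeps any single frame from stalling, at the cost of a short start-up delay proportional to the number of textures.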

Unbounded-mode scene movement

Moving the XROrigin in immersive mode has no effect on the position of scene objects relative to the tracking origin. Instead, the VolumeCamera must be moved.

This changes where rendered objects appear relative to the camera, but also applies the inverse transform to all VisionOS-supplied transforms, such as camera position and interaction positions.

As a result, moving and rotating the unbounded volume camera using these interaction positions can become quite complicated. See VolumeGrabHandle.cs for details on how this is done.

Note that the class VisionOsTransforms.cs has been used to provide a more convenient way to transform VisionOS tracking-space transforms for the camera and hand positions into Unity world-space transforms. This is important for things such as having Innequin look at the player or showing custom hand models.
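The conversion can be sketched with a rigid transform. This Python model makes three assumptions. First, the volume camera's pose is a yaw rotation about the up axis plus a translation. Second, a tracking-space point maps to Unity world space by applying that pose. Third, the inverse mapping undoes the translation and then the rotation. The function names are hypothetical. The real VisionOsTransforms.cs presumably works with Unity's full 3D rotations rather than yaw only.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rotate_y(p: Vec3, yaw_deg: float) -> Vec3:
    """Rotate p about the +Y (up) axis, matching Unity's Quaternion.Euler(0, yaw, 0)."""
    a = math.radians(yaw_deg)
    x, y, z = p
    return (x * math.cos(a) + z * math.sin(a), y, -x * math.sin(a) + z * math.cos(a))


def tracking_to_world(p: Vec3, cam_pos: Vec3, cam_yaw: float) -> Vec3:
    """Map a tracking-space point into world space via the (assumed) volume camera pose."""
    r = rotate_y(p, cam_yaw)
    return (r[0] + cam_pos[0], r[1] + cam_pos[1], r[2] + cam_pos[2])


def world_to_tracking(p: Vec3, cam_pos: Vec3, cam_yaw: float) -> Vec3:
    """Inverse transform: undo the translation, then undo the rotation."""
    local = (p[0] - cam_pos[0], p[1] - cam_pos[1], p[2] - cam_pos[2])
    return rotate_y(local, -cam_yaw)


hand = (0.0, 1.2, 0.5)  # hypothetical hand position in tracking space
cam_pos, cam_yaw = (2.0, 0.0, 0.0), 90.0
world = tracking_to_world(hand, cam_pos, cam_yaw)
back = world_to_tracking(world, cam_pos, cam_yaw)
print([round(v, 6) for v in world])  # [2.5, 1.2, 0.0]
```

The round trip back to the original hand position checks that the two functions are true inverses. A grab handle that drags the volume camera needs this property, because the positions it reads are themselves expressed relative to the camera it is moving.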